Microsoft recommends the industry standard Kimball approach for DW/BI solutions. I have put in some Microsoft white papers below to get you started. I am assuming as more ERP consultants play part in DW/BI solutions, we will see more people learning and mastering the Kimball approach.

As I wrote in my previous post, most of it is common sense – to do this more efficiently, and to deliver predictable and useful results.

Common Challenges with BI/Reporting Solutions for Dynamics GP

Most seasoned consultants have techniques supplemented by formal project management to address these issues. At the same time, most of you would have faced some of these -

- We need access to data from our old system, and make consolidated statements using data from Dynamics GP

- Business Users are trying to analyse the data, but the GP database is “too complicated”

- Integration stopped working because something was changed from the source system.

- Data maintained at multiple places in multiple systems – example – Sales department maintains list of customers in excel, marketing in their custom application, and Accounting in Dynamics GP. This causes multiple customer lists, out of sync with each other.

- Data from an integrating system updated the data in GP, and now our reports for last month have changed

- XYZ Report is running slowly.

- Data from integrating system was imported around 12 AM, and half the transactions were imported in Day 1, and half in Day 2

- The integration has been failing for one week, and nobody realized

- Our in-house integration expert has left and now nobody knows how to fix an issue with the interface!

- The integration takes very long to run every night

The Kimball approach has some guidelines which help you avoid all of these common issues. I would highly recommend reading the following whitepapers from Microsoft and ofcourse the Kimball books

- Scaling Up Your Data Warehouse with SQL Server 2008 R2

- Implementing a Microsoft SQL Server Parallel Data Warehouse Using the Kimball Approach

- Best Practices for Data Warehousing with SQL Server 2008 R2

- The Book on - Kimball’s approach

Kimball Life Cycle

Core Principles of Kimball Approach

- Understand business requirements so you can engage the business, prioritize your efforts, and deliver business value.

- Design the data warehouse data sets for flexibility, usability, and performance.

- Build and deliver quick, business process-based increments within an enterprise data framework known as the data warehouse bus matrix.

- Design and build a DW/BI system architecture based on your business requirements, data volumes, and IT systems environment.

- Build out the extract, transformation, and loading (ETL) system with standard components to deal with common design patterns found in the analytic data environment.

- Provide the complete solution, including reports, query tools, applications, portals, documentation, training, and support.

The Kimball approach starts with understanding business requirements for a data warehousing/Business intelligence solution. The ideal starting point for most organizations is to perform an initial set of interviews to gather and prioritize enterprise-wide high level business requirements for information. The result is a priority ordered list of business processes that generate data, along with high value analytic opportunities supported by that data.

Once the list of business processes and associated opportunities has been identified and prioritized, the next step is to take the highest priority business process and gather detailed business requirements related to it. This second pass at requirements is much more focused on understanding the specifics around the required data source, including attributes, definitions, business rules, data quality, and the range of analytics and applications that will be built on top of this data set.

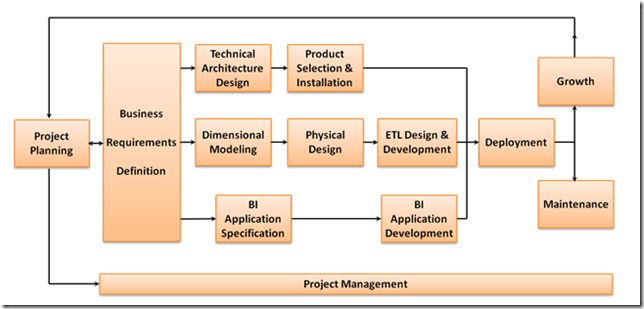

Once these detailed requirements are in place, the Lifecycle moves into the implementation phase beginning with design steps across three different tracks. The top track in Figure 1 is the technology track – in case of Dynamics GP – we have wide variety of options

- eConnect/SSIS/Web Services/SmartConnect for the ETL Layer

- SSAS/SSRS/Business Objects/SmartList Builder/PowerPivot/ 3rd party tools like BI 360 for the BI Layer

The middle track in Figure 1 is the data track. The initial step is to define the logical data model needed to support the analytic requirements. In the Kimball approach, this is a dimensional model. Once the logical model is in place, the team can build the target database in the database environment. The nature of the physical model depends on the target platform. Many database products work best with a physical dimensional model, although a more normalized model may make sense on a few platforms.

The last data step is to create the ETL system that will populate the target database as required. The ETL system is a significant effort, often consuming a majority of the initial project resources.

- In Dynamics GP – you will often be using an interface like eConnect/SmartConnect/Web Services for importing data (to meet the business rules for Dynamics)

- You will be using one of the tools above OR SSIS for exporting the data

The bottom track in Figure 1 is concerned with the BI applications: the initial set of reports and analyses that will deliver business value to the organization. This track is split into two steps; the first is the design step where a small set of high value applications and reports are identified and specified in detail. The second step is the actual implementation where these applications and reports are built. This step often has to wait until near the end of the ETL development when data is actually available in the database. Note that these reports and analyses only serve as a starting point that helps solve a high-value problem. The dimensional model is not limited in any way to this subset of reports.

Once the three implementation tracks are complete, the Lifecycle comes back together to deploy the query tools, reports, and applications to the user community. This involves extensive communication, training, documentation, and support.

The next Lifecycle iteration usually begins during the deployment of the previous iteration, when the business analysts and designers can gather detailed requirements for the next highest priority business process, create the associated dimensional model, and start the process all over again. The Lifecycle’s incremental approach is a fundamental element that delivers business value in a short timeframe, while building a long-term, enterprise information resource.

Some concepts from the Kimball Approach

Star Schema

Star Schema is the recommended dimensional model in the Kimball Approach. The numeric measurements ("facts") of a business process are concentrated in the central fact table, and the context of the measurement is represented as a set of denormalized dimension tables, which surround the fact table. They keys that implement the joins between the dimension tables and the fact table should be anonymous integer keys. These are called surrogate keys.

SQL Server 2008 has built-in performance optimizations that leverage the dimensional model (search the web for “star join optimization” for more information on this).

Having a dimensional model sitting on top of Dynamics GP Databases makes it incredibly more easy for users to understand in comparison with the thousands of SQL Server tables.

- Having a Dimensional model for Dynamics GP really helps in reporting/business users understand and use the data

Dynamics GP has a highly normalized database, which is great from a transaction processing perspective. Flexibility in terms of a Data Warehouse means – it should be flexible for different reporting requirements.

- With a base dimensional model with facts and dimensions – users can built multiple reports

Flexibility comes in part from the level of detail captured in the model. A strong design goal in the dimensional model is to always capture data at the lowest level of detail available, called the atomic level. The presence of atomic-level data allows users to roll the data up to any level of summarization required. Any aggregation prior to inclusion in the enterprise data warehouse means some detail will not be available, thus reducing flexibility.

The dimensional model keeps the atomic-level fact tables in their normalized form (by normalizing the dimension tables out of the fact table) for smaller size and better performance, but keeps each dimension in denormalized (flat) form. Note that such flat dimension tables contain exactly the same information as fully normalized (snowflaked) dimension tables but do not implement the separate tables and extra keys required to complete the normalization process. The dimensional model simplifies the physical design by dramatically reducing the number of tables and joins required for a given analytic query, which improves performance on most market leading database products running on single servers.

- Using data from a DW like SSAS reduces the load on GP Databases

- Additionally, as the SSAS cube is built for reporting, with a highly denormalized structure – you need lesser joins

I discussed some performance statistics in my previous post.

Conformed Dimensions and Enterprise Bus Matrix

Take a look at a sample bus matrix below - the row headers down the left side define the organization's primary business processes. The column headers of the bus matrix represent the primary objects that participate in those business processes. Typical examples include customer, account, product, store, employee, patient, and date. These objects are called dimensions, and they must be pre-integrated to work with all the relevant business processes.

This pre-integration is called conforming, and it involves the hard organizational data governance work of deciding the standard names, descriptions, mappings, hierarchies, and business rules that will apply across the DW/BI system.

Once this definitional work is done, these dimensions become reusable components that can be applied to every associated business process. Most importantly, the conformed dimensions are the necessary framework for integration, where the results from two or more business process can be combined into a single BI deliverable.

Slowly Changing Dimensions

Every analytic data store must provide a means to accurately track dimension attributes as they change over time. Tracking attribute changes allows the business to report on the state of the world as it was at any point in time, answering question like “What were sales by territory as of December 31st last year?” It also supports accurate causal analysis by associating the attribute values that were in effect when an event occurred with the event itself. For example, what postal code did a customer live in when they bought a certain product two years ago?

The most efficient way to capture these changes from both an ease of use and performance perspectives is to add a row to the dimension whenever an attribute changes by assigning a new surrogate key and capturing the effective date and end date for each row. These are commonly referred to as slowly changing Type 2 dimensions. You can see these control columns below.

While tracking attribute changes over time places a burden on the ETL process, it improves performance for user queries because the joins between the facts and dimensions are simple equijoins on integer keys. This also improves ease of use because the BI semantic layer does not have to handle more complex, multi-column unequal joins in order to retrieve the correct dimension row for any given historical fact event.

Tracking changes over time is a mandatory business requirement, regardless of the underlying data model you use. It is possible to track changes in a normalized model, but the complexity of keeping multiple versions across dozens of tables associated with a single dimension is much greater than dealing with changes in a single, denormalized dimension table.

Naturally this is a satellite view to the Kimball Approach – there are multiple books over 800 pages each, difficult to summarize in one post.

This was part 2 of this series.

- Overview of Kimball Approach for a scalable BI solution for Dynamics GP

- Kimball Approach for gathering BI requirements

- Master Data Management with Dynamics GP

- Building a robust ETL (Extract Transform Load) system for initial and ongoing load into Dynamics GP

- Building a Data warehouse for Dynamics GP (following best practises)

- Building a BI solution for Dynamics GP (following best practises)

- Reviewing the existing DW/BI toolset for Dynamics GP

- Analysis Cubes Module for Microsoft Dynamics GP (how it implements parts of Kimball approach)

- Latency for Dynamics GP BI (real time vs next day)

- Data Mining for Dynamics GP

- Security and Metadata for a DW/BI solution

1 comment:

With over two decades of experience, you can rest assured that you are in safe hands with the team here at Warehousestoragesupplies.co.uk. We take the time to fully understand your requirements so we can deliver the warehouse solutions you need for your organisation. We have a fantastic range of warehouse supplies available for you to order online and we have ensured the process is a straightforward one.With experience in both commercial and warehouse storage solutions, we source and supply a full range of products to meet with your requirements. This includes everything from shelving solutions through to storage cages and pallet trucks. warehouse racking systems

Post a Comment