Over the last few years we have seen a wide variety of BI solutions for Dynamics GP. As we see more and more mid to large sized Dynamics GP Implementations (40 to 100+ users) – having robust DW/BI (Data Warehousing/Business Intelligence) solutions is becoming a necessity. You can have excellent BI tools ( SSRS / BI 360 /Smartlist Builder / Crystal / Excel Refreshable reports) – but you need a scalable solution that works for a large user base.

As it is with most processes, there are best practices to achieve your goals. When I was programming, I really enjoyed reading about and implementing Design Patterns and Refactorings – that changed programming for me. Implementing these common sense techniques saved me time, and helped deliver robust solutions.

There are similar best practises for Data Warehousing and Business Intelligence. Kimball’s approach is very popular – it’s always been highly recommended in the DW/BI world. My aim with this post and the next few posts is to introduce you to the Kimball approach, and associate how it ties up with Dynamics GP Implementations. However, first lets take a look at OLAP – and where that fits into the GP world.

If you look at the system requirements for Dynamics GP – you will see that

- For 20-60 users – Microsoft recommends - Perform some online analytical processing (OLAP) cube generation

- For 60 – 100 users – Microsoft recommends – Perform online analytical processing (OLAP) cube generation (to different machine)

Microsoft provides a module – Analysis Cubes – which implements most best practices and can help significantly with performance. At the same time, its important to understand what goes behind the scenes, as you would often be integrating with multiple systems – and have custom requirements.

So what are the advantages of reporting from a dimensional OLAP database like SSAS vs. directly reporting from Dynamics GP SQL Database ?

1. OLAP database technology is optimized for querying and reporting, instead of processing transactions.

The Dynamics GP Database is designed as a highly normalized OLTP database. There are lots of small tables with relationships defined between them. This is good for additions, deletions, and modifications of a field, which can be made in just one table and then propagated through the rest of the database via the defined relationships. However, if you have written reports for GP – you would know it often involves creating multiple joins. This is fine for smaller implementations, but as the number of users and transactions increase – can lead to performance bottlenecks.

Here is a simple SQL view (Sales by Item by Month – by Victoria Yudin)

1:

2:

3: SELECT

4: D.ITEMNMBR Item_Number,

5: D.Item_Description,

6: D.Generic_Description,

7: D.Item_Class,

8: D.User_Category_1,

9: sum(case when month(D.DOCDATE) = 1 then D.SALES else 0 end) as [Jan_Sales],

10: sum(case when month(D.DOCDATE) = 2 then D.SALES else 0 end) as [Feb_Sales],

11: sum(case when month(D.DOCDATE) = 3 then D.SALES else 0 end) as [Mar_Sales],

12: sum(case when month(D.DOCDATE) = 4 then D.SALES else 0 end) as [Apr_Sales],

13: sum(case when month(D.DOCDATE) = 5 then D.SALES else 0 end) as [May_Sales],

14: sum(case when month(D.DOCDATE) = 6 then D.SALES else 0 end) as [Jun_Sales],

15: sum(case when month(D.DOCDATE) = 7 then D.SALES else 0 end) as [Jul_Sales],

16: sum(case when month(D.DOCDATE) = 8 then D.SALES else 0 end) as [Aug_Sales],

17: sum(case when month(D.DOCDATE) = 9 then D.SALES else 0 end) as [Sep_Sales],

18: sum(case when month(D.DOCDATE) = 10 then D.SALES else 0 end) as [Oct_Sales],

19: sum(case when month(D.DOCDATE) = 11 then D.SALES else 0 end) as [Nov_Sales],

20: sum(case when month(D.DOCDATE) = 12 then D.SALES else 0 end) as [Dec_Sales]

21:

22: FROM

23: (SELECT SH.DOCDATE, SD.ITEMNMBR,

24: coalesce(I.ITEMDESC, SD.ITEMDESC) Item_Description,

25: coalesce(I.ITMGEDSC, '') Generic_Description,

26: coalesce(I.ITMCLSCD,'') Item_Class,

27: coalesce(I.USCATVLS_1,'') User_Category_1,

28: CASE SD.SOPTYPE

29: WHEN 3 THEN SD.XTNDPRCE

30: WHEN 4 THEN SD.XTNDPRCE*-1

31: END SALES

32: FROM SOP30200 SH

33: INNER JOIN

34: SOP30300 SD

35: ON SD.SOPNUMBE = SH.SOPNUMBE

36: AND SD.SOPTYPE = SH.SOPTYPE

37: LEFT OUTER JOIN

38: IV00101 I

39: ON I.ITEMNMBR = SD.ITEMNMBR

40: WHERE SH.VOIDSTTS = 0

41: AND SH.SOPTYPE IN (3,4)

42: AND SD.XTNDPRCE <> 0

43: AND SD.ITEMNMBR not like 'XXXXXXXXXXXXXXX%'

44: AND year(SH.DOCDATE) = 2017 --change year as needed

45: ) D

46:

47: GROUP BY D.ITEMNMBR, D.Item_Description, D.Generic_Description, D.Item_Class,

48: D.User_Category_1

49:

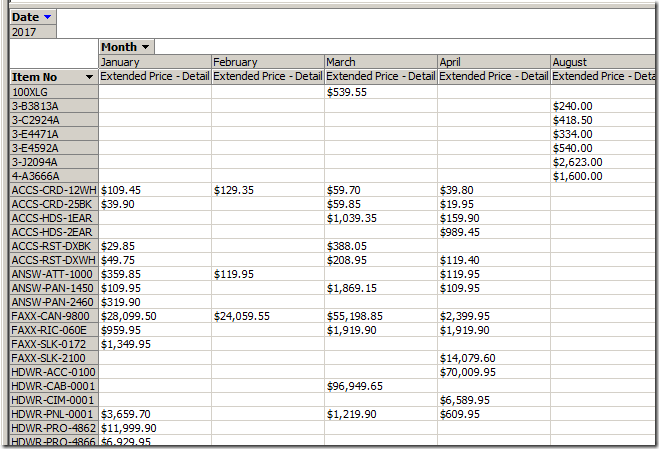

Here are the results from Fabrikam

Its getting data from multiple tables, and aggregating data on the fly. If we look at this query in the profiler – it takes about 57 ms to execute.

A OLAP system like SQL Server Analysis services, is designed for reporting and analysis. There are pre-processed aggregations, and proactive caching which helps in generating report in a fraction of a time that it would normally take.

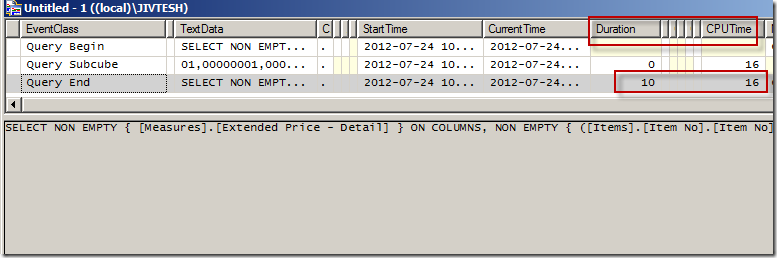

Here is the corresponding MDX (query language for SSAS – you can often get away with the generated MDX from the designer and Excel power pivot)

1: SELECT NON EMPTY { [Measures].[Extended Price - Detail] } ON COLUMNS,

2: NON EMPTY

3: {

4: ( [Items].[Item No].[Item No].AllMembers * [Master Date].[Month].[Month].AllMembers )

5: } Dimension Properties MEMBER_CAPTION,

6: MEMBER_UNIQUE_NAME ON ROWS

7: FROM (

8: SELECT ( { [Master Date].[Year].[All Date].[2016] } ) ON COLUMNS

9: FROM [Sales]

10: )

11: WHERE ( [Master Date].[Year].[All Date].[2016] ) CELL Properties Value,

12: BACK_COLOR,

13: FORE_COLOR,

14: FORMATTED_VALUE,

15: FORMAT_STRING,

16: FONT_NAME,

17: FONT_SIZE,

18: FONT_FLAGS

Here is the result output

SSAS has pre-calculated measures

If you look at this MDX query being executed in profiler, it takes about 10 ms to execute.

As you can see for the same result output SSAS delivers a much better performance, as the SSAS database is built for Analysis and reporting.

2. Analysis Cubes for Dynamics GP can serve as an easier reporting platform for end users.

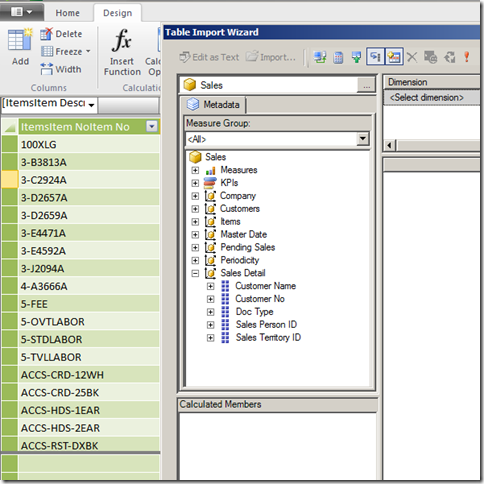

Dynamics GP with its complex Database structure can be a nighmare for end users to report on. With user friendly dimensions and measures, end users can use tools like SSRS and Excel to report on data.

Consider end users digging data from the Dynamics GP database versus

Users digging data from well defined dimensional model in SSAS (accessed from within Excel easily)

3. Users can view data in Excel, without requiring access to Dynamics GP.

For example, Analysis Cubes can be used to view all the checks that have cleared the bank, or the total purchases made by a customer, and these Excel users do not need access to Microsoft Dynamics GP. Information from Dynamics GP Analysis Cubes can be viewed in pivot tables in Microsoft Excel. Analysis Cubes can be used to spot trends by producing analytical views of sales information, buying patterns, and other values.

Hopefully, if you have read this far, I have you interested in why this is something you need to be considering for your growing BI requirements.

UPDATE : The new licensing for Dynamics GP seems to have Analysis Cubes in starter pack, which would mean it would be even more lucrative to implement this for your Dynamics GP implementation.

Over the next few posts I am aiming to look at

- Overview of Kimball Approach for a scalable BI solution for Dynamics GP

- Kimball Approach for gathering BI requirements

- Master Data Management with Dynamics GP

- Building a robust ETL (Extract Transform Load) system for initial and ongoing load into Dynamics GP

- Building a Data warehouse for Dynamics GP (following best practises)

- Building a BI solution for Dynamics GP (following best practises)

- Reviewing the existing DW/BI toolset for Dynamics GP

- Analysis Cubes Module for Microsoft Dynamics GP (how it implements parts of Kimball approach)

- Latency for Dynamics GP BI (real time vs next day)

- Data Mining for Dynamics GP

- Security and Metadata for a DW/BI solution

2 comments:

Very nice and informative article.

Shabnam

It’s hard to search out educated folks about this subject, however everyone appear to be you know exactly what you’re speaking related to! Thanks..

Dynamics GP Training

Post a Comment